Factor graph

In probability theory and its applications, a factor graph is a particular type of graphical model, with applications in Bayesian inference, that enables efficient computation of marginal distributions through the sum-product algorithm. One of the important success stories of factor graphs and the sum-product algorithm is the decoding of capacity-approaching error-correcting codes, such as LDPC and turbo codes.

A factor graph can be viewed as a hypergraph: each factor node acts like a hyperedge, since it can connect more than one (ordinary) variable node.


Definition

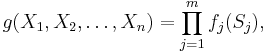

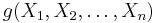

A factor graph is a bipartite graph representing the factorization of a function. Given a factorization of a function  ,

,

where  , the corresponding factor graph

, the corresponding factor graph  consists of variable vertices

consists of variable vertices  , factor vertices

, factor vertices  , and edges

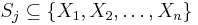

, and edges  . The edges depend on the factorization as follows: there is an undirected edge between factor vertex

. The edges depend on the factorization as follows: there is an undirected edge between factor vertex  and variable vertex

and variable vertex  when

when  . The function is tacitly assumed to be real-valued:

. The function is tacitly assumed to be real-valued:  .

.
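The construction of the vertex and edge sets can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: it assumes each factor $f_j$ is identified by a name and described only by its scope $S_j$ (a tuple of variable names). The function `build_factor_graph` and the factors `fa`, `fb` are hypothetical. Because the graph is undirected, storing each edge as a (factor, variable) pair is only a convenience.

```python
from typing import Dict, Set, Tuple

Scopes = Dict[str, Tuple[str, ...]]  # factor name f_j -> its scope S_j


def build_factor_graph(scopes: Scopes) -> Tuple[Set[str], Set[str], Set[Tuple[str, str]]]:
    """Return the variable vertices X, factor vertices F and edges E."""
    factors = set(scopes)
    variables = {x for scope in scopes.values() for x in scope}
    # There is an (undirected) edge between f_j and X_k exactly when X_k is in S_j.
    edges = {(f, x) for f, scope in scopes.items() for x in scope}
    return variables, factors, edges


# Hypothetical factorization g(X1, X2, X3, X4) = fa(X1, X2) * fb(X2, X3, X4).
X, F, E = build_factor_graph({"fa": ("X1", "X2"), "fb": ("X2", "X3", "X4")})
```

The number of edges equals the total size of all scopes, so the graph is sparse whenever each factor depends on only a few variables.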

Factor graphs can be combined with message passing algorithms to efficiently compute certain characteristics of the function $g(X_1, X_2, \dots, X_n)$, such as the marginal distributions.

Examples

Consider a function that factorizes as follows:

$$g(X_1, X_2, X_3) = f_1(X_1)\, f_2(X_1, X_2)\, f_3(X_1, X_2)\, f_4(X_2, X_3),$$

with a corresponding factor graph shown on the right. Observe that the factor graph has a cycle, $X_1 - f_2 - X_2 - f_3 - X_1$. If we merge $f_2(X_1, X_2)\, f_3(X_1, X_2)$ into a single factor, the resulting factor graph will be a tree. This is an important distinction: the sum-product algorithm is exact on trees, but on graphs with cycles it generally gives only approximate results.
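Whether a factor graph is a tree can be checked mechanically: a graph is a tree exactly when it is connected and has one fewer edge than it has vertices (counting both variable and factor vertices). The sketch below assumes the factorization $g(X_1, X_2, X_3) = f_1(X_1) f_2(X_1, X_2) f_3(X_1, X_2) f_4(X_2, X_3)$; the helper `is_tree` and the name `f23` for the merged factor $f_2 f_3$ are hypothetical, chosen for illustration.

```python
from collections import deque
from typing import Dict, Set, Tuple


def is_tree(scopes: Dict[str, Tuple[str, ...]]) -> bool:
    """Check whether the factor graph of a factorization is a tree.

    Assumes factor names and variable names are distinct strings.
    """
    adj: Dict[str, Set[str]] = {}
    for f, scope in scopes.items():
        adj.setdefault(f, set())
        for x in scope:
            adj[f].add(x)
            adj.setdefault(x, set()).add(f)
    if not adj:
        raise ValueError("empty factorization")
    # Each undirected edge appears once in a factor's set and once in a variable's set.
    n_edges = sum(len(nbrs) for nbrs in adj.values()) // 2
    # Breadth-first search from an arbitrary vertex to test connectivity.
    start = next(iter(adj))
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nbr in adj[node]:
            if nbr not in seen:
                seen.add(nbr)
                queue.append(nbr)
    # A connected graph with |V| - 1 edges has no cycles.
    return len(seen) == len(adj) and n_edges == len(adj) - 1


original = {"f1": ("X1",), "f2": ("X1", "X2"), "f3": ("X1", "X2"), "f4": ("X2", "X3")}
merged = {"f1": ("X1",), "f23": ("X1", "X2"), "f4": ("X2", "X3")}
print(is_tree(original))  # False: X1 - f2 - X2 - f3 - X1 is a cycle
print(is_tree(merged))    # True
```

The original graph has 7 vertices and 7 edges, one edge too many for a tree; merging $f_2$ and $f_3$ removes one vertex and two edges, leaving 6 vertices and 5 edges.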

Message passing on factor graphs

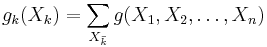

A popular message passing algorithm on factor graphs is the sum-product algorithm, which efficiently computes all the marginals of the individual variables of the function. In particular, the marginal of variable $X_k$ is defined as

$$g_k(X_k) = \sum_{X_{\bar{k}}} g(X_1, X_2, \dots, X_n),$$

where the notation $X_{\bar{k}}$ means that the summation goes over all the variables except $X_k$. The messages of the sum-product algorithm are conceptually computed in the vertices and passed along the edges. A message from or to a variable vertex is always a function of that particular variable. For instance, when a variable is binary, the messages over the edges incident to the corresponding vertex can be represented as vectors of length 2: the first entry is the message evaluated at 0, the second entry is the message evaluated at 1. When a variable takes real values, messages can be arbitrary functions, and special care needs to be taken in their representation.
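A minimal sketch of the sum-product algorithm on a small, hypothetical tree-structured factor graph with binary variables, using the length-2 message vectors just described (entry 0 is the message evaluated at 0, entry 1 the message evaluated at 1). The factorization $g(X_1, X_2, X_3) = f_a(X_1)\, f_b(X_1, X_2)\, f_c(X_2, X_3)$, its table values, and all function names are assumptions made for illustration. A brute-force evaluation of the defining sum for $g_k(X_k)$ is included for comparison.

```python
from itertools import product
from math import prod
from typing import Dict, List, Tuple

# Hypothetical tree-structured factorization with binary variables:
#   g(X1, X2, X3) = fa(X1) * fb(X1, X2) * fc(X2, X3)
# Each factor is (scope, table); table maps a tuple of values (in scope order)
# to a non-negative real number.
Factor = Tuple[Tuple[str, ...], Dict[Tuple[int, ...], float]]
FACTORS: Dict[str, Factor] = {
    "fa": (("X1",), {(0,): 0.6, (1,): 0.4}),
    "fb": (("X1", "X2"), {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.8}),
    "fc": (("X2", "X3"), {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.5, (1, 1): 0.5}),
}
DOMAIN = (0, 1)  # values double as list indices: message[v] is the message at v


def neighbors(var: str) -> List[str]:
    """Factor vertices adjacent to a variable vertex."""
    return [f for f, (scope, _) in FACTORS.items() if var in scope]


def var_to_factor(var: str, fac: str) -> List[float]:
    """Product of the messages arriving at `var` from every factor except `fac`."""
    msg = [1.0] * len(DOMAIN)
    for g in neighbors(var):
        if g != fac:
            msg = [m * i for m, i in zip(msg, factor_to_var(g, var))]
    return msg


def factor_to_var(fac: str, var: str) -> List[float]:
    """Sum over the factor's other variables of the factor times their incoming messages."""
    scope, table = FACTORS[fac]
    incoming = {y: var_to_factor(y, fac) for y in scope if y != var}
    msg = [0.0] * len(DOMAIN)
    for values in product(DOMAIN, repeat=len(scope)):
        assignment = dict(zip(scope, values))
        weight = table[values] * prod(incoming[y][assignment[y]] for y in incoming)
        msg[assignment[var]] += weight
    return msg


def marginal(var: str) -> List[float]:
    """Sum-product marginal g_k(X_k): product of all messages into `var`."""
    msg = [1.0] * len(DOMAIN)
    for f in neighbors(var):
        msg = [m * i for m, i in zip(msg, factor_to_var(f, var))]
    return msg


def brute_force_marginal(var: str) -> List[float]:
    """Direct evaluation of the defining sum over all variables except `var`."""
    variables = sorted({x for scope, _ in FACTORS.values() for x in scope})
    out = [0.0] * len(DOMAIN)
    for values in product(DOMAIN, repeat=len(variables)):
        assignment = dict(zip(variables, values))
        out[assignment[var]] += prod(
            table[tuple(assignment[x] for x in scope)] for scope, table in FACTORS.values()
        )
    return out


for v in ("X1", "X2", "X3"):
    print(v, marginal(v), brute_force_marginal(v))
```

The mutual recursion only terminates because the graph is a tree, and it recomputes messages on every call, which is acceptable for a toy example. A practical implementation would compute each edge's message once in each direction and reuse it for all marginals.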

In practice, the sum-product algorithm is used for statistical inference, where $g(X_1, X_2, \dots, X_n)$ is a joint distribution or a joint likelihood function, and the factorization reflects the conditional independencies among the variables.
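As an illustration of this use, the sketch below assumes a hypothetical three-variable chain $p(x_1, x_2, x_3) = p(x_1)\, p(x_2 \mid x_1)\, p(x_3 \mid x_2)$ with binary variables and made-up probability tables. Because $X_3$ is conditionally independent of $X_1$ given $X_2$, no factor contains both $X_1$ and $X_3$, and the posterior $p(x_1 \mid x_3)$ can be obtained by passing messages along the chain with $X_3$ clamped to its observed value, followed by normalization. The table and function names are hypothetical.

```python
from typing import List

# Hypothetical conditional probability tables (binary variables).
P_X1 = [0.7, 0.3]                            # p(x1)
P_X2_GIVEN_X1 = [[0.9, 0.1], [0.2, 0.8]]     # row x1, column x2: p(x2 | x1)
P_X3_GIVEN_X2 = [[0.6, 0.4], [0.1, 0.9]]     # row x2, column x3: p(x3 | x2)


def posterior_x1_given_x3(x3_obs: int) -> List[float]:
    # Factor p(x3 | x2) with X3 clamped: its message to X2 is p(x3_obs | x2).
    msg_x2 = [P_X3_GIVEN_X2[x2][x3_obs] for x2 in (0, 1)]
    # Factor p(x2 | x1) sums out X2 and sends the result to X1.
    msg_x1 = [sum(P_X2_GIVEN_X1[x1][x2] * msg_x2[x2] for x2 in (0, 1)) for x1 in (0, 1)]
    # Multiply in the prior factor p(x1); the normalizer z equals p(x3 = x3_obs).
    unnormalized = [P_X1[x1] * msg_x1[x1] for x1 in (0, 1)]
    z = sum(unnormalized)
    return [u / z for u in unnormalized]


print(posterior_x1_given_x3(1))
```

The unnormalized vector is exactly the marginal of $g$ with $X_3$ fixed, so normalizing it turns a joint-distribution marginal into a conditional distribution.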

The Hammersley–Clifford theorem shows that other probabilistic models such as Markov networks and Bayesian networks can be represented as factor graphs; the factor-graph representation is frequently used when performing inference over such networks using belief propagation. On the other hand, Bayesian networks are more naturally suited for generative models, as they can directly represent the causalities of the model.
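A sketch of how the two kinds of networks map onto factor vertices, assuming a Bayesian network is given as a mapping from each variable to its list of parents and a Markov network as a list of its cliques. Each conditional $p(X_i \mid \mathrm{parents}(X_i))$ becomes one factor; each clique potential becomes one factor (for a Markov network, this clique factorization is what the Hammersley–Clifford theorem guarantees for strictly positive distributions). The function names, factor names `f_<var>` and `psi_<i>`, and the example networks are hypothetical.

```python
from typing import Dict, List, Tuple

Scopes = Dict[str, Tuple[str, ...]]  # factor name -> scope


def bayes_net_to_factor_scopes(parents: Dict[str, List[str]]) -> Scopes:
    """One factor p(X_i | parents(X_i)) per variable; its scope is X_i plus its parents."""
    return {f"f_{x}": (x, *ps) for x, ps in parents.items()}


def markov_net_to_factor_scopes(cliques: List[Tuple[str, ...]]) -> Scopes:
    """One potential factor per clique of the Markov network; its scope is the clique."""
    return {f"psi_{i}": tuple(clique) for i, clique in enumerate(cliques)}


# Hypothetical Bayesian network: C -> S, C -> R, S -> W, R -> W.
bn = bayes_net_to_factor_scopes({"C": [], "S": ["C"], "R": ["C"], "W": ["S", "R"]})
# Hypothetical Markov network with cliques {A, B} and {B, C, D}.
mn = markov_net_to_factor_scopes([("A", "B"), ("B", "C", "D")])
print(bn)
print(mn)
```

Although the example Bayesian network is a directed acyclic graph, its factor graph contains the cycle C - f_S - S - f_W - R - f_R - C, so belief propagation on it would in general be approximate.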

See also

- Belief propagation

- Bayesian inference

- Conditional probability

- Markov network

- Bayesian network

- Hammersley–Clifford theorem

External links

- A tutorial-style dissertation by Volker Koch

- An Introduction to Factor Graphs by Hans-Andrea Loeliger, IEEE Signal Processing Magazine, January 2004, pp. 28–41.
